Choice+ Survey Protocol: White Paper

This paper is based on the presentation “A test of web/PAPI protocols and incentives for the Residential Energy Consumption Survey,” made at the annual meeting of the American Association for Public Opinion Research in May 2016. RTI International authors are Paul Biemer, Joe Murphy, and Stephanie Zimmer. U.S. Energy Information Administration authors are Chip Berry, Katie Lewis, and Grace Deng.

Acknowledgments

We acknowledge and thank the U.S. Energy Information Administration Office of Energy Consumption and Efficiency Statistics for support of this work. Information and data related to the Residential Energy Consumption Survey (RECS) are available at https://www.eia.gov/consumption/residential/. We also thank Ashley Amaya at RTI International for her valuable assistance with this research.

Overview

Choice Plus (Choice+) is a dual-mode survey protocol that can be applied to an address-based sample (ABS) to maximize response quality and response rate while minimizing costs. It was tested against three other protocols and found to perform best in an experiment embedded in a large-scale survey of the general household population.

Introduction

In surveys that offer multiple mode options for response, one mode may be accessible to and preferable for a majority of respondents while another may be more cost-effective or well-suited to provide high-quality data. In the case of ABS surveys that offer a paper-and-pencil interview (PAPI) option along with a computer-assisted web interview (CAWI) option, respondents may prefer the straightforward task of responding to the PAPI while researchers may prefer that respondents use the CAWI mode to reduce costs and take advantage of the quality features built directly into the instrument. Some respondents do not prefer a CAWI option because they lack web access, have inadequate computer skills, or simply find web response inconvenient. When provided a choice between PAPI and CAWI, we have seen the majority of respondents select PAPI (Biemer et al., 2016). However, when we have provided a differential monetary incentive for respondents to reply by CAWI, we have seen a higher response rate overall and a majority responding by CAWI rather than PAPI. We use the name Choice+ to refer to the protocol that provides a concurrent mode choice but provides extra promised incentive for responding by CAWI because this is the mode that will maximize quality and minimize costs. This paper describes the details of the protocol and an experiment that tested the effectiveness of Choice+ on a general population ABS household survey.

Background

In an ABS design, we have available a mailing address for every sampled unit that can be used as a means of contact, either in person or by mail. Telephone numbers and e-mail addresses may be available from auxiliary sources, but the coverage is far from complete and the accuracy of this information, when available, is not known up front. This means that the only cost-efficient way to maximize contact with units in an ABS sample is by mail.

When inviting households to participate in a survey by mail, two popular options among researchers are PAPI and CAWI. As a survey mode, CAWI offers relative cost-efficiency over PAPI. Once respondents are contacted and agree to participate, there are no costs associated with receipting and manually entering their responses. CAWI also has data quality advantages over PAPI, including programmatic control of branching, questionnaire content, and the range of values that are permissible for responses.

Past research has found that the response rates for single-mode PAPI surveys are higher than for CAWI surveys (Manfreda et al., 2008). In some cases, lack of web access or computer skills is a barrier to participation for a sizable portion of the target population (Millar & Dillman, 2011). Further, many respondents prefer PAPI to CAWI questionnaires given the straightforward nature of completing the task, upfront understanding of the survey’s length, and elimination of extra steps to log in and orient to the website. When we provide the choice of PAPI and CAWI using the same incentives for both options, we have seen the majority of respondents select PAPI (Biemer et al., 2016).

The challenge for researchers in a multi-mode (CAWI and PAPI) design for ABS surveys is how to maximize data quality, response, and the proportion of respondents choosing CAWI while keeping costs low. Apart from simply offering the choice of CAWI or PAPI in the first contact with respondents, two viable alternative options exist:

- Provide only the CAWI option in the first mailing and subsequently offer PAPI as an option for those who do not respond (CAWI/PAPI).

- Provide both options concurrently, but offer a higher promised incentive for CAWI response relative to PAPI response (Choice+).

In the next section, we describe an experiment conducted to test the effectiveness of these approaches.

Experimental Design

We tested the following four data collection protocols:

- CAWI Only—Only the web response option was offered for all survey response invitations.

- CAWI/PAPI—The web response option was offered at the first invitation while both web and paper were offered in all subsequent invitations.

- Choice—Response by either paper or web questionnaire was requested in each survey response invitation.

- Choice+—Response by either paper or web questionnaire was requested in each survey response invitation. However, an additional $10 bonus incentive was promised if the respondent responded by web rather than by paper.

The four protocols were crossed with two incentive treatments. The first was a low-incentive treatment that included a $5 prepaid incentive in the first questionnaire mailing with the promise of an additional $10 for responding to the survey request. The second was a high-incentive treatment that included a $5 prepaid incentive in the first questionnaire mailing with the promise of an additional $20 for responding to the survey request. In each incentive treatment, those in the Choice+ protocol were promised an additional $10 to respond by CAWI rather than by PAPI (i.e., Choice+ low-incentive treatment: $10 promised for PAPI, $20 for CAWI; Choice+ high-incentive treatment: $20 promised for PAPI, $30 for CAWI). Differences between incentive treatments were similar across protocols, so we report only the overall protocol results here.
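The incentive structure can be summarized in a few lines of code. The following is a minimal sketch using the dollar amounts stated above; the function and constant names are illustrative assumptions, not part of the study materials, and the function does not check whether a mode was actually offered under a given protocol.

```python
# Dollar amounts are taken from the text; all names are illustrative assumptions.
PREPAID = 5                            # included in the first questionnaire mailing
PROMISED = {"low": 10, "high": 20}     # promised for responding, by incentive treatment
WEB_BONUS = 10                         # extra promised amount for CAWI response under Choice+

PROTOCOLS = ("CAWI Only", "CAWI/PAPI", "Choice", "Choice+")


def promised_incentive(protocol: str, treatment: str, mode: str) -> int:
    """Return the promised (post-response) incentive in dollars."""
    if protocol not in PROTOCOLS:
        raise ValueError(f"unknown protocol: {protocol!r}")
    if mode not in ("CAWI", "PAPI"):
        raise ValueError(f"unknown mode: {mode!r}")
    amount = PROMISED[treatment]
    # Only Choice+ pays a differential incentive for web response.
    if protocol == "Choice+" and mode == "CAWI":
        amount += WEB_BONUS
    return amount


def total_incentive(protocol: str, treatment: str, mode: str) -> int:
    """Prepaid plus promised incentive for a responding household."""
    return PREPAID + promised_incentive(protocol, treatment, mode)


print(promised_incentive("Choice+", "low", "CAWI"))   # 20
print(promised_incentive("Choice+", "high", "PAPI"))  # 20
print(total_incentive("Choice+", "high", "CAWI"))     # 35
```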

We compared the protocols with regard to their costs, response rates, consistency with benchmark estimates from other large-scale surveys, and the respondent’s choice of CAWI versus PAPI response. The data are from the Residential Energy Consumption Survey (RECS) National Pilot, an experimental study conducted concurrently with the official 2015 RECS.

The National Pilot web and mail instruments were designed to be completed in 30 minutes. The target population for the National Pilot is all housing units occupied as primary housing units in the United States. Vacant homes, seasonal housing units, and group quarters, such as dormitories, nursing homes, prisons, and military barracks, are excluded from the study. However, housing units on military installations are included. A total of 9,650 housing units were included in the National Pilot sample with equal proportions assigned to each experimental protocol.

The National Pilot sought to achieve the following four objectives:

- Maximize the overall response rate as well as the response rates for key housing unit domains.

- Achieve a balanced distribution of respondents based on similarity with benchmark distributions from the American Community Survey (ACS).

- Maximize respondents’ use of the web questionnaire rather than paper to

  - reduce the costs for printing questionnaires, return postage, and the labor associated with mail receipting, data entry, and data review; and

  - eliminate certain types of item nonresponse, out-of-range responses, branching errors, and inconsistent responses.

- Further reduce costs by encouraging response (by either web or paper) earlier in the data collection period, which would avoid repeated mailings and their associated costs.

In addition to testing the more traditional single-mode (CAWI Only), concurrent (Choice), and sequential (CAWI/PAPI) designs, we wanted to see whether the Choice+ design, with its additional promised incentive, could effectively push respondents to respond in the preferred mode (CAWI).

Results of the Experiment

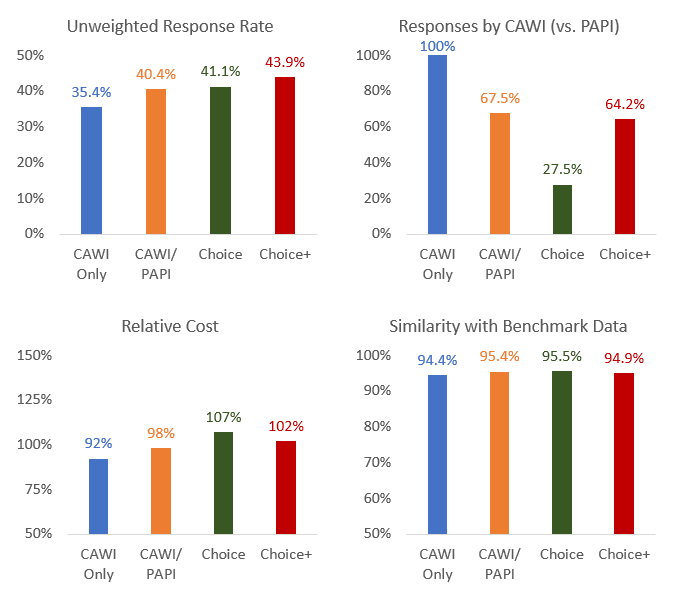

Figure 1 presents the key outcomes of the experiment: response rates¹, percentage responding by CAWI (vs. PAPI), the relative cost of each protocol, and similarity with the ACS benchmark data for key survey estimates. The Choice+ protocol achieved the highest overall response rate at 43.9% (significantly higher than CAWI/PAPI at the .05 level). While only 27.5% of respondents under the Choice protocol selected the CAWI option, this pattern was reversed under the Choice+ protocol, with 64.2% choosing to respond by web.
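Footnote 1 identifies the response rates as AAPOR Response Rate 3 (RR3), which divides completed interviews by the estimated number of eligible cases. The sketch below shows that calculation under stated assumptions: the disposition counts in the example are hypothetical, not National Pilot results, and the source does not describe how the eligibility rate `e` was estimated.

```python
def aapor_rr3(complete: int, partial: int, refusal: int, non_contact: int,
              other: int, unknown_household: int, unknown_other: int,
              e: float) -> float:
    """AAPOR Response Rate 3.

    RR3 = I / ((I + P) + (R + NC + O) + e * (UH + UO)),
    where e is the estimated share of unknown-eligibility cases
    that are actually eligible.
    """
    if not 0.0 <= e <= 1.0:
        raise ValueError("e must be between 0 and 1")
    denominator = (
        (complete + partial)
        + (refusal + non_contact + other)
        + e * (unknown_household + unknown_other)
    )
    if denominator <= 0:
        raise ValueError("no eligible cases")
    return complete / denominator


# Hypothetical disposition counts, for illustration only.
example_rate = aapor_rr3(
    complete=400, partial=0, refusal=50, non_contact=100,
    other=0, unknown_household=500, unknown_other=0, e=0.9,
)
print(f"{example_rate:.1%}")  # 40.0%
```

Lowering `e` shrinks the denominator and raises the rate, so the choice of eligibility estimate should be reported alongside any RR3 figure.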

To compare total costs by protocol, we summed all relevant fixed and variable costs of materials, labor, printing, incentives, and postage for the mailing of the questionnaires and follow-up contacts, as well as the costs of keying² the paper and electronic questionnaires. Figure 1 shows these results as the ratio of the total cost for a given protocol to the average total cost across protocols. The Choice+ protocol cost only 2% more than the average and 4% more than the CAWI/PAPI protocol.
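The relative cost measure is a simple ratio to the mean. As a minimal sketch, assuming each protocol has a single total cost figure: the dollar values below are made-up placeholders chosen only to make the arithmetic easy to follow, and they are not the study's costs.

```python
def relative_costs(total_costs: dict[str, float]) -> dict[str, float]:
    """Ratio of each protocol's total cost to the mean total cost across protocols."""
    if not total_costs:
        raise ValueError("no protocols supplied")
    mean_cost = sum(total_costs.values()) / len(total_costs)
    return {protocol: cost / mean_cost for protocol, cost in total_costs.items()}


# Placeholder totals (arbitrary units); NOT figures from the National Pilot.
hypothetical_totals = {
    "CAWI Only": 90.0,
    "CAWI/PAPI": 98.0,
    "Choice": 110.0,
    "Choice+": 102.0,
}

ratios = relative_costs(hypothetical_totals)
for protocol, ratio in ratios.items():
    print(f"{protocol}: {ratio:.2f}")
```

A ratio of 1.02 means a protocol cost 2% more than the average protocol; by construction, the ratios average to 1.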

Finally, we considered the representativeness of the responding sample as measured by comparing the distributions of National Pilot responses with the corresponding weighted 2014 ACS national distributions for 12 variables that are common to both surveys. For this analysis, we treat the ACS data as the gold standard; thus, differences between the National Pilot and the ACS are interpreted as indications of bias in the National Pilot.

Because the variables considered in this analysis were categorical, we chose the dissimilarity index (d) as the indicator of unrepresentativeness, where

$$d = \frac{1}{2} \sum_{k} \left| p_k - \pi_k \right|.$$

Here, $p_k$ and $\pi_k$ are the National Pilot and ACS proportions for each of the 12 variables in the kth category, respectively. This measure can be interpreted as the proportion of observations that would need to change categories in the National Pilot to achieve perfect agreement with the ACS. In that sense, 1-d may be regarded as the similarity rate between the two surveys. A value of 1-d from 90% to 95% is usually regarded as “good” agreement, and a value of 1-d > 95% is usually regarded as “very good” agreement. We found Choice+, along with all other protocols, to fall in the “good” to “very good” range of similarity with the ACS.
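As an illustration, the dissimilarity index for one categorical variable is half the sum of absolute differences between the two sets of category proportions. The sketch below assumes that standard definition; the proportions in the example are hypothetical, and the source does not state how the index was summarized across the 12 variables, so the function handles a single variable.

```python
def dissimilarity_index(sample_props, benchmark_props, tol=1e-6):
    """Dissimilarity index d for one categorical variable.

    d = 0.5 * sum over categories of |sample proportion - benchmark proportion|.
    Both inputs list proportions over the same categories in the same order.
    """
    if len(sample_props) != len(benchmark_props):
        raise ValueError("inputs must cover the same categories")
    for props in (sample_props, benchmark_props):
        if abs(sum(props) - 1.0) > tol:
            raise ValueError("proportions must sum to 1")
    return 0.5 * sum(abs(p - q) for p, q in zip(sample_props, benchmark_props))


# Hypothetical three-category variable; not National Pilot or ACS data.
pilot = [0.30, 0.45, 0.25]
benchmark = [0.35, 0.40, 0.25]

d = dissimilarity_index(pilot, benchmark)
print(round(d, 4))      # 0.05
print(round(1 - d, 4))  # 0.95
```

In this example, 5% of the sample would need to move from the second category to the first to match the benchmark, giving a similarity rate of 95%.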

Conclusion

Taken together, these results allow the survey protocols to be compared along multiple dimensions to decide which best fits a survey’s objectives. For our purposes, the Choice+ protocol, a concurrent dual-mode method with an extra incentive for web response, excelled on the dimensions deemed most important for the future RECS. Choice+ had the highest response rate at both low and high incentive levels and maintained the highest submission rate throughout data collection. This suggests that the protocol may be more robust to incentive levels than the traditional concurrent option (i.e., Choice) or the sequential CAWI/PAPI method. For the criteria examined in this study, the Choice+ protocol scored substantially better than the Choice protocol and at least as well as the CAWI/PAPI protocol, particularly at eliciting response via the web.

In all, these results suggest that mixed-mode mail surveys can serve as a viable platform for national household data collections, such as the RECS. Our analysis indicates that the Choice+ strategy, in particular, is an effective method for simultaneously increasing both web and overall survey response.

Further research is needed to replicate the Choice+ experiment and to test variations on these strategies (e.g., using smaller promised incentives for web response or a smaller prepaid incentive). Additional criteria, such as the effects of the protocol on measurement error and item nonresponse, are also important to examine and weigh against the other factors in selecting the most appropriate data collection protocol.

Works Cited

- American Association for Public Opinion Research (AAPOR). (2016). Standard definitions: Final dispositions of case codes and outcome rates for surveys (9th ed.). http://www.aapor.org/AAPOR_Main/media/publications/Standard-Definitions20169theditionfinal.pdf

- Biemer, P., Murphy, J., Zimmer, S., Berry, C., Lewis, K., & Deng, G. (2016). A test of web/PAPI protocols and incentives for the Residential Energy Consumption Survey. Presented at the annual meeting of the American Association for Public Opinion Research, Austin, TX. http://www.aapor.org/AAPOR_Main/media/AnnualMeetingProceedings/2016/E6-5-Biemer.pdf

- Manfreda, K., Lozar, M. B., Berzelak, J., Haas, I., & Vehovar, V. (2008). Web surveys versus other survey modes: A meta-analysis comparing response rates. International Journal of Market Research, 50, 79–104. https://www.mrs.org.uk/ijmr/archive

- Millar, M. M., & Dillman, D. A. (2011). Improving response to web and mixed-mode surveys. Public Opinion Quarterly, 75(2), 249–269. https://academic.oup.com/poq/article/75/2/249/1860211/Improving-Response-to-Web-and-Mixed-Mode-Surveys

Footnotes

1. AAPOR Response Rate 3 (AAPOR, 2016).

2. In some surveys, using scanned forms (e.g., via TeleForm) could save data entry costs and thus change the cost comparisons. For the National Pilot, scanned forms were considered but were deemed to be cost-inefficient.